This project guides you through setting up a working ML video image classification system using the ROCK 4SE and the Coral USB Accelerator with a USB webcam.

Author: Peter Milne, engineer and Linux advocate with more SBCs than an Apollo 11 landing craft.

The example uses a pre-trained bird classification model that can recognise over 900 different American and European bird species. A Python script controls a USB camera and image processing pipeline for the model inferencing.

The principles used are similar to any image classification system and can be applied, for example, to applications like video surveillance, traffic management and building entry security.

Although the project uses advanced ML techniques, you should be able to follow the steps even if this is your first experience of video image classification.

We cover setting up the ROCK 4SE operating system and USB camera, then adding the Coral USB Accelerator and its supporting software.

If you want to dig deeper into how the system works, the Python code is Open Source. We also provide links to the excellent Coral documentation, where you can learn all about re-training the ML model with your captured images to take the project to the next level.

—

Credit: Google

Licence: Apache Licence, Version 2.0, January 2004

Step 1: OS install

This project uses the official Debian Desktop OS for the ROCK 4SE. We tested with this version from the Radxa Debos releases repo.

- Download the image and save it in a directory on your host computer:

- Flash a 32GB or larger SD card using Balena Etcher.

- Insert the SD card into the ROCK 4 and connect a monitor, mouse and keyboard. Do not connect the Coral USB Accelerator yet.

- Power up the ROCK 4SE and set up your OS in the usual way; see the Getting Started guide for the ROCK 4SE.

Step 2: Camera setup

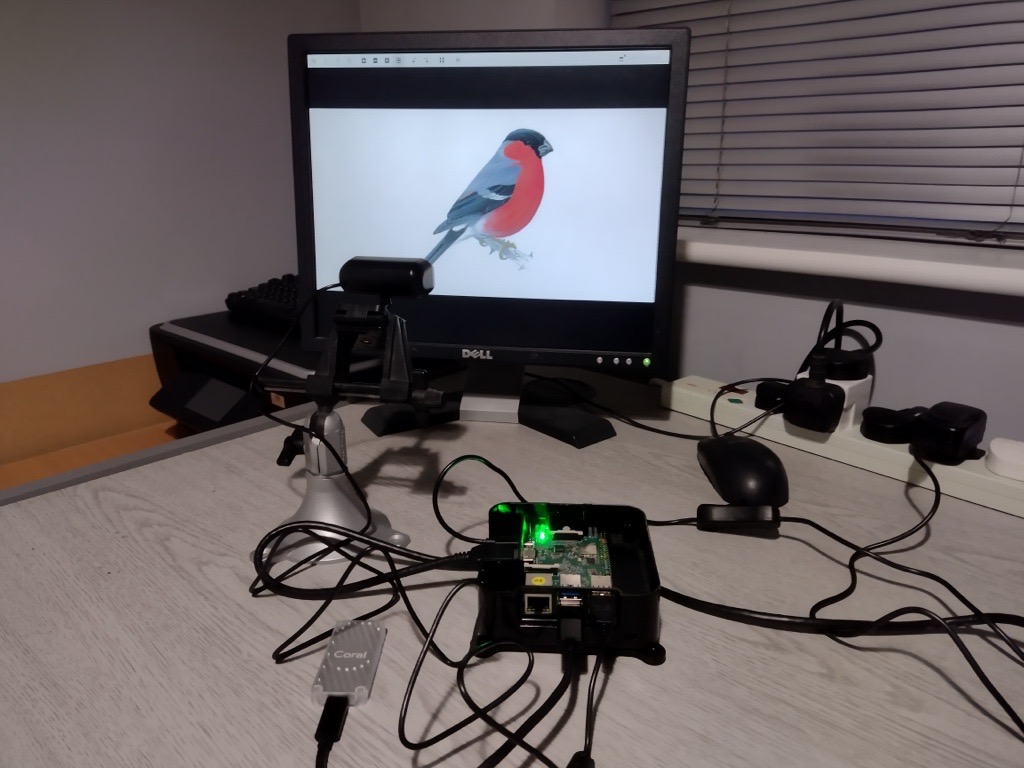

We attached the USB camera to the ROCK 4SE and mounted it on a stand in front of a 16-inch monitor displaying images of birds. To test the ML model, we set up a Linux PC running the Eye of GNOME image viewer to display a slideshow of random bird images, but any images of birds should work well.

https://packages.debian.org/bullseye/eog

The HDMI output from the ROCK was attached to another monitor displaying the Bullseye desktop.

The Coral USB module is attached to the ROCK via the lower USB 3.0 port.

During testing, each bird picture is shown for 5 seconds while the video pipeline analyses each frame using the image classification model. If a bird is recognised, the script displays the likely species and confidence level in the Terminal, and the video stream is visible in a separate window.
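The reporting step described above can be sketched in a few lines of Python. This is a minimal illustration, not the Birdcam script itself: the `report` function, the 0.5 threshold and the sample labels are all assumptions chosen for the example.

```python
# Hypothetical sketch: filter classifier results by confidence and format them.
# Names and the threshold value are illustrative, not taken from the Birdcam code.

def report(results, labels, threshold=0.5):
    """Return one text line per result whose score meets the threshold.

    results: iterable of (class_id, score) pairs, with score in the range 0..1
    labels:  dict mapping class_id to a human-readable name
    """
    lines = []
    for class_id, score in results:
        if score >= threshold:
            name = labels.get(class_id, f"unknown id {class_id}")
            lines.append(f"{name}: {score:.0%}")
    return lines


if __name__ == "__main__":
    sample_labels = {0: "Robin", 1: "Blue Tit"}
    sample_results = [(0, 0.82), (1, 0.07)]
    for line in report(sample_results, sample_labels):
        print(line)
```

Raising the threshold reduces false identifications at the cost of missing less certain matches, so the best value depends on your camera and lighting.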

Step 3: Edge TPU runtime

Follow the next few steps using the command line in Terminal to install support for the Coral USB Accelerator and ML components.

The Edge TPU runtime provides a software library that runs on the ROCK 4SE and allows it to interact with the Coral USB Accelerator to run lightning-fast ML applications.

- Enter the following command (all on one line) into the Terminal:

echo "deb https://packages.cloud.google.com/apt coral-edgetpu-stable main" | sudo tee /etc/apt/sources.list.d/coral-edgetpu.list

- Add the repository signing key (note the “-” directly after -O, which tells wget to write the download to standard output):

wget -O- https://packages.cloud.google.com/apt/doc/apt-key.gpg | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/coral-archive-keyring.gpg

- Update the system repo index

sudo apt update

- Now install the latest Edge TPU runtime

sudo apt-get install libedgetpu1-std

Step 4: Coral USB Accelerator

Now it’s time to connect the Coral USB Accelerator to the ROCK 4:

- Using the USB-C cable supplied, attach one end to the Coral.

- Attach the other end to one of the blue USB 3.0 ports on the ROCK 4SE, which give the fastest transfer speeds.

Tip: If you’ve already attached the Coral before this step, detach and reattach it.
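One way to confirm that the ROCK can see the accelerator is to ask PyCoral to list the attached Edge TPU devices. The sketch below relies on PyCoral, which is installed in Step 5, so run it after that step; the `find_edge_tpus` wrapper is a hypothetical helper that returns `None` when PyCoral is missing.

```python
# Sketch: list Edge TPU devices visible to PyCoral.
# Assumes python3-pycoral (Step 5) is installed; returns None if it is not.

def find_edge_tpus():
    try:
        from pycoral.utils.edgetpu import list_edge_tpus
    except ImportError:
        return None  # PyCoral is not installed yet
    return list_edge_tpus()


if __name__ == "__main__":
    devices = find_edge_tpus()
    if devices is None:
        print("PyCoral is not installed; complete Step 5 first.")
    elif not devices:
        print("No Edge TPU found; try detaching and reattaching the Coral.")
    else:
        for device in devices:
            # Each entry describes one detected device.
            print(device)
```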

Step 5: PyCoral library

Google provides a custom Python library for Coral called PyCoral. It’s built on the Open Source TensorFlow Lite and designed to speed up development and provide extra functionality for the Coral USB Accelerator.

- Install PyCoral by entering the following command in the Terminal

sudo apt install python3-pycoral

Step 6: Birdcam code

Now that the ROCK 4, Coral USB Accelerator and camera are all set up, the Python code to run the Birdcam can be installed. It has been adapted from the Coral example code provided by Google and uses a pre-trained ML bird classification model compiled to run on Coral.

The model can identify over 900 bird species and allows the creation of a working system quickly and easily without having to build your own ML model.
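A classification model of this kind reports a numeric class ID, which is mapped to a species name through a label file. The sketch below assumes a simple text format with one `ID name` pair per line; the sample entries are made up for illustration, and the label file shipped with the model may be laid out differently. PyCoral also offers `read_label_file` in `pycoral.utils.dataset` for the same job.

```python
# Hypothetical sketch: parse label text of the form "<id> <name>", one per line.
# The format is an assumption; check the label file that comes with the model.

def parse_labels(text):
    """Return a dict mapping integer class IDs to label names."""
    labels = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        class_id, _, name = line.partition(" ")
        labels[int(class_id)] = name.strip()
    return labels


if __name__ == "__main__":
    sample = "0 Example Finch\n1 Example Sparrow\n"
    print(parse_labels(sample))
```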

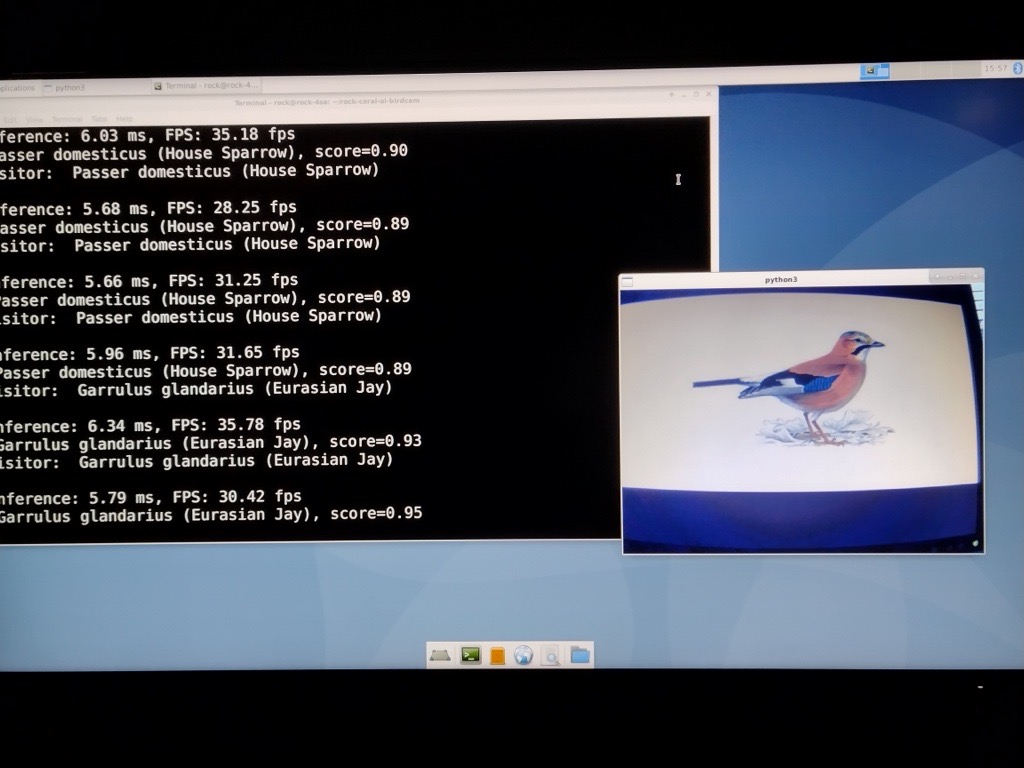

A video processing pipeline is set up running at 30 frames per second (fps). Coral analyses each frame with the ML classification model, and an inference score shows how closely the frame matches each of the labels in the model.
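To make the per-frame step more concrete, here is a rough sketch of classifying a single still image with PyCoral. It follows the general pattern of Coral's published classification examples rather than the Birdcam source, the model and image paths are placeholders you would supply, and it needs PyCoral, Pillow and an attached Coral to run. The `frame_budget_ms` helper is a hypothetical addition that works out how long each frame may take at a given rate, roughly 33 ms at 30 fps.

```python
# Sketch only: classify one still image on the Edge TPU with PyCoral.
# File paths are placeholders; this is not the Birdcam pipeline itself.

def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at the given frame rate."""
    return 1000.0 / fps


def classify_image(model_path, image_path, top_k=1):
    """Return (class_id, score) pairs for one image."""
    # Imported here so the rest of the file loads without PyCoral installed.
    from PIL import Image
    from pycoral.adapters import classify, common
    from pycoral.utils.edgetpu import make_interpreter

    interpreter = make_interpreter(model_path)
    interpreter.allocate_tensors()

    # Resize the image to the (width, height) the model expects.
    size = common.input_size(interpreter)
    image = Image.open(image_path).convert("RGB").resize(size)
    common.set_input(interpreter, image)

    interpreter.invoke()
    classes = classify.get_classes(interpreter, top_k=top_k)
    return [(c.id, c.score) for c in classes]


if __name__ == "__main__":
    print(f"Budget at 30 fps: {frame_budget_ms(30):.1f} ms per frame")
```

If inference on a frame takes longer than the budget, a live pipeline has to drop frames or fall behind, which is why offloading the model to the accelerator matters.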

- Install git

sudo apt install git

- Clone the Birdcam code from the OKdo GitHub repo with the following commands:

cd ~

git clone https://github.com/LetsOKdo/rock-coral-ai-birdcam.git

cd ~/rock-coral-ai-birdcam

- Install the software dependencies

bash birdcam/install_requirements.sh

When Birdcam is running, inference text appears in the Terminal and a separate window opens displaying the camera video feed. Whenever a bird is identified, the inference score and the label are displayed in the Terminal.

- Use CTRL-C to stop the birdcam application

Step 7: Testing

To check that Birdcam was working, we started it and showed it random images of birds displayed on our host computer.

Birdcam identified common bird species fairly accurately, giving us confidence that it would work when relocated outside.

This video shows the birdcam in action – you should be able to see the name of the bird and the confidence score in the Terminal window along with the video feed from the camera. It really is quite amazingly accurate!

Summary

This guide has shown how to set up an ML image classification model that can identify bird species using a video camera feed on the ROCK 4SE and Coral USB Accelerator.

The ML model has been pre-trained to recognise over 900 species of birds and we successfully tested the system using images of birds displayed for the camera.

To improve the classification accuracy the model can be further trained using the images captured by your camera. Details of how to do this with Coral can be found here.

The original Coral example project can be found here.
